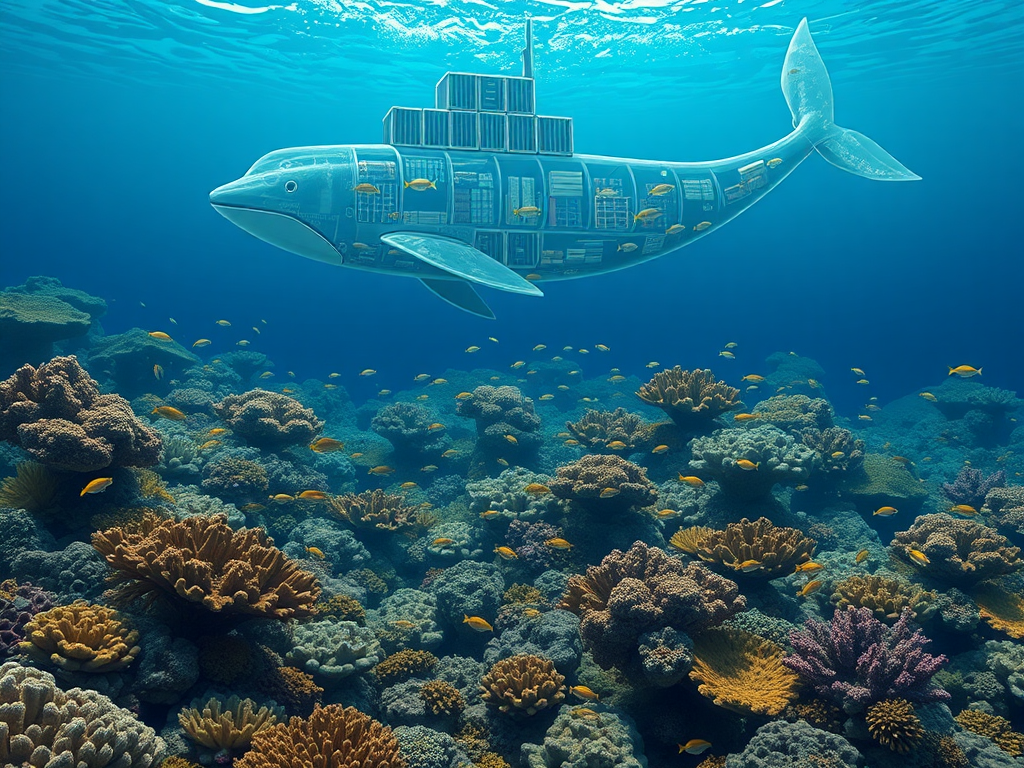

Introduction

Have you ever faced a situation where there's not enough memory when handling large amounts of data? Or when writing code, have you wished for a more elegant way to implement iteration? These issues can be resolved using Python generators. Today, let's discuss this powerful yet often overlooked feature.

Basic Concepts

What are generators exactly? Simply put, generators are a special type of iterator in Python that allow us to "generate data on demand," rather than loading all data into memory at once.

Here's a simple example:

    def number_generator(n):
        current = 0
        while current < n:
            yield current
            current += 1

    for num in number_generator(5):
        print(num)
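To make the "on demand" behaviour visible, the sketch below drives the same kind of generator by hand with `next()`. It re-declares `number_generator` so that it runs on its own; the `log` list is an illustrative addition (not part of the original example) used only to record when the function body actually executes.

```python
def number_generator(n, log):
    """Yield 0..n-1, recording each step in `log` (hypothetical helper list)."""
    current = 0
    while current < n:
        log.append(f"producing {current}")
        yield current
        current += 1


log = []
gen = number_generator(2, log)

# Calling the generator function runs none of its body yet.
steps_before_first_next = len(log)

first = next(gen)   # runs the body up to the first yield
second = next(gen)  # resumes right after the yield

# A third next() finds the loop finished and raises StopIteration.
try:
    next(gen)
    exhausted = False
except StopIteration:
    exhausted = True

print(steps_before_first_next, first, second, exhausted)
```

A `for` loop does the same thing behind the scenes: it calls `next()` repeatedly and stops quietly when `StopIteration` is raised.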

Deep Understanding

Let's understand how generators work through a more practical example. Suppose we need to process a huge file where each line is a piece of user data:

    def read_user_data(file_path):
        with open(file_path, 'r') as file:
            for line in file:
                # Clean each line before processing
                cleaned_line = line.strip()
                if cleaned_line:
                    yield cleaned_line.split(',')

    for user_data in read_user_data('users.txt'):
        name = user_data[0]
        age = user_data[1]
        print(f"Processing user: {name}, Age: {age}")
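Because the file is read lazily, a consumer can stop early and the remaining lines are never processed. The sketch below assumes a small temporary file standing in for `users.txt` (the names and ages are made-up sample data) and uses `itertools.islice` to take only the first two records.

```python
import itertools
import os
import tempfile


def read_user_data(file_path):
    """Yield each non-empty line of a comma-separated file as a list of fields."""
    with open(file_path, "r") as file:
        for line in file:
            cleaned_line = line.strip()
            if cleaned_line:
                yield cleaned_line.split(",")


# Made-up sample data standing in for users.txt.
sample = "alice,30\n\nbob,25\ncarol,41\n"
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
    tmp.write(sample)
    path = tmp.name

try:
    users = read_user_data(path)
    # islice pulls only two records; the third record is never split or yielded.
    first_two = list(itertools.islice(users, 2))
    users.close()  # close the generator so the file handle is released now
finally:
    os.remove(path)

print(first_two)
```

Calling `close()` on a generator you abandon early is optional in many scripts, but it makes the `with` block inside the generator exit immediately instead of whenever the generator is garbage-collected.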

Practical Applications

Generators can be useful in many scenarios. Personally, I most often use them when handling large datasets. Did you know? In 64-bit CPython, a list of a million integers occupies tens of megabytes (roughly 8 MB for the list itself plus the integer objects it points to), while a generator object takes only a few hundred bytes no matter how many values it will eventually produce.
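A quick way to check this on your own machine is `sys.getsizeof`. The sketch below is only a rough measurement: `getsizeof` reports the size of the container itself, not the objects it references, and exact numbers vary between Python versions and platforms.

```python
import sys

n = 1_000_000

numbers_list = list(range(n))          # all values exist at once
numbers_gen = (x for x in range(n))    # values are produced one at a time

list_bytes = sys.getsizeof(numbers_list)  # list object only, excludes the ints
gen_bytes = sys.getsizeof(numbers_gen)

print(f"list:      {list_bytes:,} bytes")
print(f"generator: {gen_bytes:,} bytes")
```

The generator's size stays the same if `n` is raised to a billion, whereas the list would no longer fit in memory on most machines.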

Here's an example of processing large amounts of data:

    def process_large_dataset(data_generator):
        total = 0
        count = 0
        for value in data_generator:
            total += value
            count += 1
            # Print progress every 1000 pieces of data processed
            if count % 1000 == 0:
                print(f"Processed {count} pieces of data")
        return total / count if count > 0 else 0

    def number_stream(n):
        for i in range(n):
            yield i

    average = process_large_dataset(number_stream(1_000_000))
    print(f"Average: {average}")
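One thing to keep in mind with this pattern is that a generator can be consumed only once. The sketch below uses a condensed, hypothetical `average` helper (the same single-pass logic without the progress output) to show what happens when an exhausted generator is passed in a second time.

```python
def number_stream(n):
    """Yield the integers 0..n-1 one at a time."""
    for i in range(n):
        yield i


def average(values):
    """Single-pass mean of any iterable; returns 0 for an empty one."""
    total = 0
    count = 0
    for value in values:
        total += value
        count += 1
    return total / count if count > 0 else 0


stream = number_stream(5)
first_pass = average(stream)    # consumes every value: (0+1+2+3+4) / 5
second_pass = average(stream)   # the stream is already exhausted

# To iterate again, create a fresh generator.
fresh_pass = average(number_stream(5))

print(first_pass, second_pass, fresh_pass)
```

No error is raised on the second pass; the loop simply sees no items, which is why the empty-input guard in the return statement matters.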

Usage Tips

When using generators, there are some tips to make the code more elegant. For example, you can use generator expressions, which are a more concise syntax:

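    squares = (x*x for x in range(10))

Generator functions can also be chained into a pipeline, where each stage pulls its values from the previous one:

    def numbers():
        for i in range(10):
            yield i

    def squares():
        for n in numbers():
            yield n * n

    def evens():
        for n in squares():
            if n % 2 == 0:
                yield n

    # Only the last stage is iterated; it pulls values through the others
    print(list(evens()))  # [0, 4, 16, 36, 64]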
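The stages above are hard-wired to one another. A common variation, sketched here with hypothetical function names (`square`, `only_even`), is to have each stage accept any iterable so the same pieces can be recombined. This is one possible design, not the only one.

```python
def square(values):
    """Yield the square of each incoming value."""
    for v in values:
        yield v * v


def only_even(values):
    """Pass through only the even values."""
    for v in values:
        if v % 2 == 0:
            yield v


# Stages are composed by nesting calls; nothing runs until iteration starts.
pipeline = only_even(square(range(10)))
result = list(pipeline)

# The same stages work on any other iterable, including a generator expression.
total = sum(only_even(square(x for x in (1, 2, 3, 4))))

print(result, total)
```

Each value travels through the whole pipeline before the next one is produced, so no intermediate list is ever built.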

Performance Comparison

Let's do a simple performance test comparing the use of generators and lists to handle large amounts of data:

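    import time

    import memory_profiler  # third-party: pip install memory-profiler

    @memory_profiler.profile
    def using_list():
        return list(range(1_000_000))

    @memory_profiler.profile
    def using_generator():
        return (x for x in range(1_000_000))

    # perf_counter is the clock intended for measuring short durations.
    # Both timings include the overhead of the profiler itself.
    start_time = time.perf_counter()
    numbers_list = using_list()
    print(f"List time: {time.perf_counter() - start_time:.2f} seconds")

    # Note: this times only the creation of the generator object, not the
    # production of its values, so the generator's work is not included.
    start_time = time.perf_counter()
    numbers_gen = using_generator()
    print(f"Generator time: {time.perf_counter() - start_time:.2f} seconds")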
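For a comparison that does not depend on a third-party package, and that measures the work of actually consuming the values, the standard library's `tracemalloc` and `time.perf_counter` can be used. This is a rough sketch rather than a rigorous benchmark; the helper name `measure` and the size `N` are illustrative choices, and absolute numbers will differ between machines.

```python
import time
import tracemalloc

N = 200_000  # illustrative size; raise it to make the gap more visible


def measure(make_iterable):
    """Return (sum, peak_bytes, seconds) for summing the iterable it builds."""
    tracemalloc.start()
    start = time.perf_counter()
    total = sum(make_iterable())
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return total, peak, elapsed


list_total, list_peak, list_time = measure(lambda: [x * 2 for x in range(N)])
gen_total, gen_peak, gen_time = measure(lambda: (x * 2 for x in range(N)))

print(f"list:      peak {list_peak:,} bytes, {list_time:.3f} s")
print(f"generator: peak {gen_peak:,} bytes, {gen_time:.3f} s")
```

Expect the two running times to be in the same ballpark; the advantage of the generator shows up in peak memory, not necessarily in speed.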

Real-World Case

Let's look at a more complex real-world case: using generators to process large log files.

    import re
    from datetime import datetime

    def parse_log_file(file_path):
        """Generator function to parse log files"""
        # Assumed line format: [YYYY-MM-DD HH:MM:SS] [ERROR] message
        error_pattern = re.compile(
            r'\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\]\s*\[ERROR\]\s*(.*)'
        )
        with open(file_path, 'r') as f:
            for line in f:
                match = error_pattern.match(line)
                if match:
                    # Parse date and error message
                    date_str, error_msg = match.groups()
                    try:
                        date = datetime.strptime(date_str, '%Y-%m-%d %H:%M:%S')
                    except ValueError:
                        # Skip lines whose timestamp is not a valid date
                        continue
                    yield {
                        'date': date,
                        'message': error_msg.strip(),
                        'severity': 'ERROR'
                    }

    def analyze_errors(log_path):
        """Analyze error logs"""
        error_count = 0
        errors_by_hour = {}
        for error in parse_log_file(log_path):
            error_count += 1
            hour = error['date'].hour
            errors_by_hour[hour] = errors_by_hour.get(hour, 0) + 1
            # Display processing progress in real-time
            if error_count % 100 == 0:
                print(f"Processed {error_count} error records")
        return errors_by_hour
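To try the idea end to end without a real log file, the sketch below writes a few made-up lines to a temporary file and counts errors per hour. It assumes a log format of `[YYYY-MM-DD HH:MM:SS] [ERROR] message`, which the article does not specify; `iter_errors` and `count_by_hour` are hypothetical, condensed stand-ins for the two functions above.

```python
import os
import re
import tempfile
from collections import Counter
from datetime import datetime

# Assumed format: [YYYY-MM-DD HH:MM:SS] [LEVEL] message
LINE_RE = re.compile(r"\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\]\s*\[ERROR\]\s*(.*)")


def iter_errors(file_path):
    """Lazily yield one dict per well-formed ERROR line."""
    with open(file_path, "r") as f:
        for line in f:
            match = LINE_RE.match(line)
            if not match:
                continue
            date_str, message = match.groups()
            try:
                date = datetime.strptime(date_str, "%Y-%m-%d %H:%M:%S")
            except ValueError:
                continue  # timestamp has the right shape but is not a real date
            yield {"date": date, "message": message.strip()}


def count_by_hour(errors):
    """Count error records per hour of day."""
    return Counter(error["date"].hour for error in errors)


# Made-up sample log lines.
sample_log = (
    "[2024-01-01 09:15:00] [ERROR] disk full\n"
    "[2024-01-01 09:45:10] [INFO] retrying\n"
    "[2024-01-01 09:59:59] [ERROR] disk still full\n"
    "[2024-13-01 10:00:00] [ERROR] impossible month\n"
    "[2024-01-01 14:30:00] [ERROR] timeout\n"
)

with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False) as tmp:
    tmp.write(sample_log)
    log_path = tmp.name

try:
    errors_by_hour = dict(count_by_hour(iter_errors(log_path)))
finally:
    os.remove(log_path)

print(errors_by_hour)
```

The `INFO` line is ignored by the pattern and the line with month 13 is dropped by the `ValueError` handler, so only three records are counted.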

Summary and Reflection

Through today's study, we have gained an in-depth understanding of the working principles and application scenarios of Python generators. Have you noticed that generators not only help us save memory but also make the code more elegant and readable?

I personally think that generators are one of Python's most elegant features. They perfectly embody Python's design philosophy: simplicity is beauty.

Have you encountered scenarios in your work where generators can optimize the process? Feel free to share your experiences and thoughts in the comments.

Remember, programming is not just about implementing functionality; it's also about pursuing elegance and efficiency in code. Generators are a powerful tool to help us achieve this goal.

Further Reading

If you're interested in generators, consider exploring the following related concepts:

- Coroutines and async/await syntax
- Generator tools in the itertools module
- Combining context managers with generators

These topics are closely related to generators, and mastering them can take your Python programming skills to the next level.
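As a small taste of the `itertools` and context manager topics, here is a sketch that pairs an infinite generator with `itertools` helpers and builds a context manager from a generator using `contextlib.contextmanager`. The names `naturals` and `timer` are illustrative, not part of any library.

```python
import itertools
import time
from contextlib import contextmanager


def naturals():
    """Infinite generator: 0, 1, 2, ..."""
    n = 0
    while True:
        yield n
        n += 1


@contextmanager
def timer(results, label):
    """Store the elapsed time of the with-block in results[label]."""
    start = time.perf_counter()
    try:
        yield  # the body of the with-block runs here
    finally:
        results[label] = time.perf_counter() - start


timings = {}
with timer(timings, "squares"):
    # takewhile stops the infinite stream once a square reaches 50.
    squares = (n * n for n in naturals())
    small_squares = list(itertools.takewhile(lambda s: s < 50, squares))

first_three = list(itertools.islice(naturals(), 3))

print(small_squares, first_three)
```

An infinite generator is safe only as long as something downstream, such as `takewhile` or `islice`, limits how much of it is consumed.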
