Foreword

Have you ever had a Python application run perfectly on your machine and then fail once it was deployed to a server? Dependency mismatches, version conflicts, inconsistent configuration: these problems are frustrating, and they are exactly what containerization aims to solve. Today, let's dive deep into containerization practices for Python applications.

Pain Points

I remember the troubles I had when I first started Python development. Once, I developed a data analysis application using libraries like pandas and numpy. Everything worked fine in local testing, but when it was deployed to the test server, various dependency conflicts emerged. Most critically, the server was running other Python applications, each with its own dependency requirements. Virtual environments helped with Python packages, but they do not isolate the interpreter version or system libraries, so they were not a complete solution.

Have you encountered similar issues? Let's see how containerization thoroughly solves these problems.

Environment

The core advantage of containers is providing an isolated, consistent runtime environment. Imagine your Python application living in its own "small house" that contains everything the application needs, and no matter where you move this house, the environment inside remains exactly the same.

In practice, I've found that a good Python application containerization solution should include these key elements:

First is the choice of base image. I recommend using official Python images as the base, such as python:3.9-slim. This image is much smaller than the full version but contains all core components needed to run Python applications.

FROM python:3.9-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["python", "app.py"]

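However, if your application is relatively complex, I strongly recommend using multi-stage builds. The first stage installs dependencies on the full image, which includes build tools, and the final stage copies only the installed packages onto the slim image. This is an important technique I learned when optimizing container image size: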

FROM python:3.9 AS builder

WORKDIR /app

COPY requirements.txt .

RUN pip install --user -r requirements.txt

FROM python:3.9-slim

WORKDIR /app

COPY --from=builder /root/.local /root/.local

ENV PATH=/root/.local/bin:$PATH

COPY . .

CMD ["python", "app.py"]

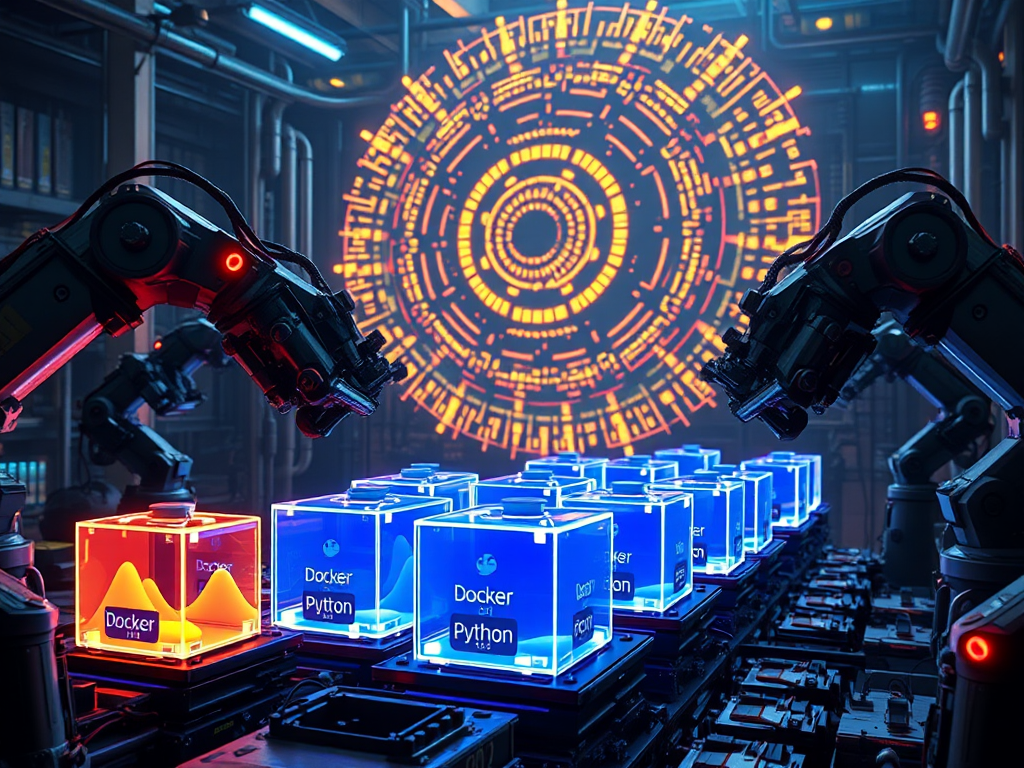

Practice

In real projects, I've found that containerizing Python applications requires attention to many details. Let me share some practical insights:

- Dependency Management

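To solve dependency issues, simply copying requirements.txt isn't enough. I suggest using pipenv or poetry to manage dependencies, so that every build installs exactly the versions recorded in the lock file. With pipenv, `--system` installs into the image's own Python instead of creating a virtual environment, and `--deploy` fails the build if Pipfile.lock is out of date. Handle it like this in your Dockerfile: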

COPY Pipfile Pipfile.lock ./

RUN pip install pipenv && pipenv install --system --deploy
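To confirm at startup that the image really contains the pinned versions, a small check along these lines can help. This is only a sketch built on the standard-library `importlib.metadata`; the function name `find_mismatches` and the shape of the `pins` mapping are hypothetical, not part of pipenv or poetry.

```python
from importlib import metadata


def find_mismatches(pins):
    """Compare pinned versions against what is actually installed.

    `pins` maps a distribution name to the exact version string expected
    (a hypothetical structure, e.g. built from your lock file).
    Returns {name: (expected, installed_or_None)} for every mismatch.
    """
    mismatches = {}
    for name, expected in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # the distribution is not installed at all
        if installed != expected:
            mismatches[name] = (expected, installed)
    return mismatches
```

The comparison is a plain string match, so it only suits exact pins. An empty result means every listed package is installed at the expected version; anything else is worth logging before the application starts serving traffic.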

- Health Checks

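Health checks are particularly important because they help us detect application issues promptly. I usually add this to my Dockerfile. It assumes `requests` is among the application's dependencies, and `raise_for_status()` makes a non-2xx response count as a failure:

HEALTHCHECK --interval=30s --timeout=3s \
  CMD python -c 'import requests; requests.get("http://localhost:8000/health", timeout=2).raise_for_status()' || exit 1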
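The check above only works if the application actually answers on `/health`. As a rough illustration, here is a minimal endpoint using only the standard library. The names `HealthHandler` and `make_health_server` are made up for this sketch, and a real application would more likely expose the route through its web framework.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Answers GET /health with 200 and every other path with 404."""

    def do_GET(self):
        if self.path == "/health":
            body = b"ok"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        # Suppress per-request access logs so frequent probes
        # do not flood the container's log output.
        pass


def make_health_server(port=8000):
    """Build (but do not start) a server bound to all interfaces."""
    return HTTPServer(("0.0.0.0", port), HealthHandler)
```

Calling `make_health_server().serve_forever()` would then serve the endpoint on port 8000, the port the health check targets.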

- Log Handling

Log handling requires special attention in containerized environments. I recommend outputting logs to standard output:

import logging
import sys

logging.basicConfig(
    stream=sys.stdout,  # basicConfig writes to stderr unless told otherwise
    level=logging.INFO,
    format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'
)

Scaling

When applications need to scale, Kubernetes is a good choice. I typically configure Python application deployments like this:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: python-app
spec:
  replicas: 3
  selector:  # required by apps/v1; the label value here is illustrative
    matchLabels:
      app: python-app
  template:
    metadata:
      labels:
        app: python-app
    spec:
      containers:
        - name: python-app
          image: python-app:1.0
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
            limits:
              memory: "128Mi"
              cpu: "500m"
          readinessProbe:
            httpGet:
              path: /health
              port: 8000
            initialDelaySeconds: 5
            periodSeconds: 10

In actual operation, I've found that setting appropriate resource limits is particularly important. Python processes can grow in memory unexpectedly, for example when loading large pandas DataFrames, and a container that exceeds its memory limit is killed. Proper limits keep one misbehaving container from starving the others.
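It can also help for the application to know the limit it is running under, for example to size caches or batch sizes. The sketch below reads the limit from the usual cgroup files inside a Linux container. The function name `read_memory_limit` is hypothetical, and the two paths are the common cgroup v2 and v1 locations, which may differ on some hosts.

```python
from pathlib import Path

# Common locations of the memory limit inside a Linux container.
CGROUP_LIMIT_FILES = (
    "/sys/fs/cgroup/memory.max",                    # cgroup v2
    "/sys/fs/cgroup/memory/memory.limit_in_bytes",  # cgroup v1
)


def read_memory_limit(paths=CGROUP_LIMIT_FILES):
    """Return the memory limit in bytes, or None if none is set or readable."""
    for path in paths:
        try:
            raw = Path(path).read_text().strip()
        except OSError:
            continue  # file missing or unreadable: try the next location
        if raw == "max":
            return None  # cgroup v2 spelling of "no limit"
        if raw.isdigit():
            return int(raw)
    return None
```

With the `128Mi` limit from the manifest above, this would be expected to return 134217728 on a cgroup v2 host. On cgroup v1, an unlimited container reports a very large number rather than `max`, so callers may want to treat implausibly large values as "no limit".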

Reflection

Through years of practice, I increasingly feel that containerization isn't just a deployment technology, but a transformation in development thinking. It forces us to consider application portability, environment consistency, and resource isolation during development.

For example, when developing new features now, I always consider: How will this feature perform in a containerized environment? Will it bring additional resource overhead? Is the configuration flexible enough? This mindset has helped me write better code.

What do you think? What insights have you gained while using containerization technology? Feel free to share your experiences in the comments.

Let's continue discussing more details about Python application containerization. I've always believed that technological progress comes from continuous practice and communication. Everyone's experience is unique and worth sharing and learning from.

Summary

Containerization technology has fundamentally changed how Python applications are deployed. It not only solves environment consistency issues but also provides good scalability and resource isolation. However, to use this technology well, we need to establish a containerization mindset during development and consider how applications will perform in containerized environments.

Do you now have a new understanding of Python application containerization? If you're considering containerizing your applications, I hope these shared experiences provide some inspiration.
